TwoYearsWithTruffle

From APIDesign


In May 2015, when I joined Oracle Labs and was given the task of turning Truffle from a research system for writing fast AST interpreters into an industry-ready framework, I wrote an essay called Domain Expert, asking whether one can design API as a service or whether one has to be a Domain Expert to design an API. Now it is time to reflect and to describe how my attempts to design API as a service turned out.


Polyglot Beginnings

One of the unique features of Truffle is its polyglot nature. The ability to freely mix languages like JavaScript, Ruby or R at full speed (as demonstrated by my sieve project, https://github.com/jtulach/sieve#readme) is clearly amazing. Most of that had already been written and published by Matthias Grimmer. When I joined, my task was just to polish it into a formal API, exactly in line with the vision of design as a service. Matthias had done a lot of work: he was able to run benchmarks written in all three scripting languages and also mix them with his own C interpreter. That meant there were a lot of tests to run, and just booting up the languages was complicated, as every language needed its own proprietary setup.

It was clear what I had to do: design a system for registering Truffle languages in a declarative way and initializing them in a uniform style. That suited me well, as my previous NetBeans Runtime Container API experience was all about the registration and discovery of services.
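To make "declarative registration" concrete, here is a minimal plain-Java sketch. It is not Truffle's actual API: `LanguageRegistration`, `Language` and `LanguageRegistry` are hypothetical names, and `ToyUpper`/`ToyReverse` are toy stand-ins for real languages. Each language only declares its name and MIME type. The registry reads that metadata without running any language code, and later initializes every language in the same way, on first use. A real system would discover the classes through something like `java.util.ServiceLoader` or generated metadata rather than an explicit list.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Set;

// Hypothetical annotation: a language declares its metadata instead of running setup code.
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.TYPE)
@interface LanguageRegistration {
    String name();
    String mimeType();
}

// Hypothetical uniform contract that every registered language implements.
interface Language {
    Object eval(String code);
}

// Two toy "languages" standing in for real implementations.
@LanguageRegistration(name = "Upper", mimeType = "text/x-upper")
class ToyUpper implements Language {
    public Object eval(String code) {
        return code.toUpperCase();
    }
}

@LanguageRegistration(name = "Reverse", mimeType = "text/x-reverse")
class ToyReverse implements Language {
    public Object eval(String code) {
        return new StringBuilder(code).reverse().toString();
    }
}

// Hypothetical registry: discovers languages from their annotations, initializes them lazily.
final class LanguageRegistry {
    private final Map<String, Class<? extends Language>> byMimeType = new LinkedHashMap<>();
    private final Map<String, Language> initialized = new LinkedHashMap<>();

    LanguageRegistry(Class<?>... candidates) {
        for (Class<?> c : candidates) {
            LanguageRegistration reg = c.getAnnotation(LanguageRegistration.class);
            if (reg != null && Language.class.isAssignableFrom(c)) {
                // Only the declarative metadata is read; no language code runs yet.
                byMimeType.put(reg.mimeType(), c.asSubclass(Language.class));
            }
        }
    }

    Set<String> mimeTypes() {
        return byMimeType.keySet();
    }

    // Every language is created in the same way, on first use.
    Object eval(String mimeType, String code) {
        return initialized.computeIfAbsent(mimeType, this::instantiate).eval(code);
    }

    private Language instantiate(String mimeType) {
        Class<? extends Language> c = byMimeType.get(mimeType);
        if (c == null) {
            throw new IllegalArgumentException("No language registered for " + mimeType);
        }
        try {
            return c.getDeclaredConstructor().newInstance();
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("Cannot initialize " + c.getName(), e);
        }
    }

    public static void main(String[] args) {
        LanguageRegistry registry = new LanguageRegistry(ToyUpper.class, ToyReverse.class);
        System.out.println(registry.mimeTypes());
        System.out.println(registry.eval("text/x-upper", "polyglot"));
    }
}
```

Tests and tools written against such a registry never refer to a concrete language class, only to MIME types.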

I just had to decide whether it was better to fit into an existing standard (e.g. implement the ScriptEngine API) or to design something from scratch. The dilemma is always the same: is it better to follow the standard and compromise on the things that don't fit into it (in this case mostly the polyglot features), or to design a new API that matches our needs 100%? Given that the polyglot features were the most important selling point, I went for a new API. That was probably a good choice, but the missing support for ScriptEngine still bites us from time to time, and there is an open issue to provide such a generic ScriptEngine implementation.

Anyway, TruffleLanguage and PolyglotEngine were created, and all of Matthias's interop tests could be rewritten to use just these APIs instead of talking to each language directly. A good example that design as a service can work.

Testing Compatibility

Another thing that was more or less obvious was the need for compatibility between languages. There are two approaches to tackle that:

- testing from the bottom

- testing from the top

Testing from the top simply means writing sample programs that mix and match languages and assert some output. Again, the sieve project contains examples of that approach. However, this can only test the languages one knows about (i.e. one is at the top of the chain of dependencies and knows them all). Matthias once created a matrix of three parts of an algorithm, each written in different languages, and then mixed them together in all possible combinations. That is a valid approach, and certainly the right one when measuring the overall performance of the system. However, it is a lot of work (work that should be distributed among the language writers), and the coverage usually isn't equally distributed among all languages.
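The cost of testing from the top is easy to estimate. With P parts of an algorithm and L languages, mixing them in all possible ways yields L^P programs. Assuming the four languages mentioned above (JavaScript, Ruby, R and C) and three parts, that is 4^3 = 64 programs to write, run and check. The hypothetical `MixMatrix` helper below merely enumerates those combinations.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

final class MixMatrix {
    // Enumerates every assignment of a language to each part of the algorithm (L^P entries).
    static List<List<String>> combinations(List<String> languages, int parts) {
        List<List<String>> result = new ArrayList<>();
        result.add(new ArrayList<>()); // start with one empty program
        for (int p = 0; p < parts; p++) {
            List<List<String>> next = new ArrayList<>();
            for (List<String> prefix : result) {
                for (String language : languages) {
                    List<String> extended = new ArrayList<>(prefix);
                    extended.add(language); // part p is written in this language
                    next.add(extended);
                }
            }
            result = next;
        }
        return result;
    }

    public static void main(String[] args) {
        List<List<String>> all = combinations(Arrays.asList("js", "ruby", "r", "c"), 3);
        System.out.println(all.size() + " programs, e.g. " + all.get(1));
    }
}
```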

While I value both approaches, I lean towards testing from the bottom, as that is the way to test multiple implementations of an abstract API: one writes a test compatibility kit (a TCK) containing abstract test definitions with tasks that each language author needs to finish by writing a suitable code snippet in their own language. This approach scales much better and gives consistent coverage across all language implementations. Each time we detect a deviation from the expected polyglot behavior, we add a test to TruffleTCK and all the language writers automatically benefit: once they update to a newer version of the TCK, they are notified and can implement the newly desired functionality, increasing compatibility between all languages.
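A minimal sketch of testing from the bottom, with hypothetical names (this is not the real `TruffleTCK` API): the abstract `PolyglotTck` class owns the test logic, and a language author only implements the abstract methods. In a real TCK those methods would supply snippets of guest-language code. Here the snippets are simulated by Java lambdas so the example runs on a plain JDK.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.BinaryOperator;
import java.util.function.Supplier;

// Hypothetical TCK: the checks are written once, by the API owners.
abstract class PolyglotTck {
    // A language supplies a "snippet" that adds two numbers (simulated by a Java function).
    protected abstract BinaryOperator<Object> plusSnippet();

    // A language supplies a "snippet" that returns the string "Hello".
    protected abstract Supplier<Object> helloSnippet();

    // Shared test definitions: a check added here reaches every language at once.
    final List<String> runAll() {
        List<String> failures = new ArrayList<>();
        Object sum = plusSnippet().apply(40, 2);
        if (!(sum instanceof Number) || ((Number) sum).intValue() != 42) {
            failures.add("plus: expected 42, got " + sum);
        }
        Object hello = helloSnippet().get();
        if (!"Hello".equals(String.valueOf(hello))) {
            failures.add("hello: expected Hello, got " + hello);
        }
        return failures;
    }
}

// What a (toy) language author writes: only the snippets, no test logic.
final class ToyLanguageTck extends PolyglotTck {
    @Override
    protected BinaryOperator<Object> plusSnippet() {
        return (a, b) -> ((Number) a).intValue() + ((Number) b).intValue();
    }

    @Override
    protected Supplier<Object> helloSnippet() {
        return () -> "Hello";
    }

    public static void main(String[] args) {
        System.out.println("failures: " + new ToyLanguageTck().runAll());
    }
}
```

Adding a new check to `runAll` immediately applies to every subclass, which is the scaling property described above.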

I'd say this part of my Truffle work went fine even though I still wasn't a Truffle Domain Expert.

Polishing Interop

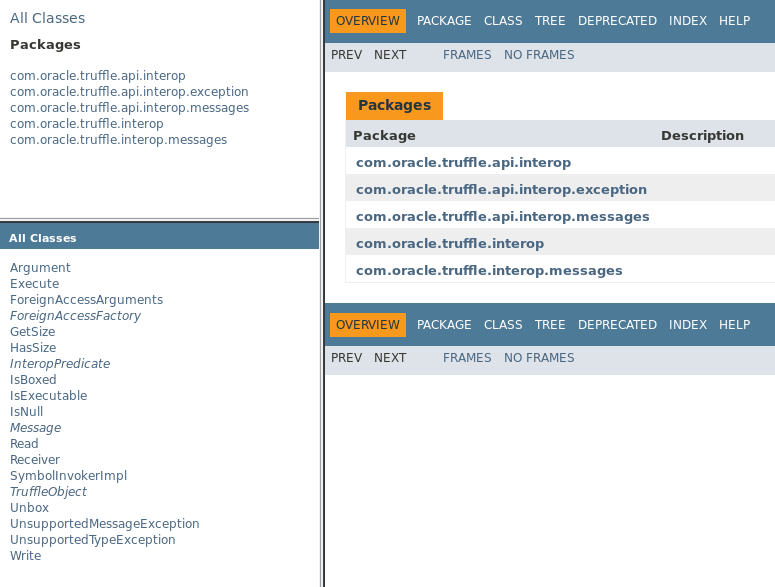

Truffle polyglot capabilities are based on so called interop API also created by Matthias. My task was to polish it. That required me to gain some Domain Expert knowledge, but not that much as the interop is a kind of isolated part of Truffle system that can be (to some extent) understood on its own. Moreover most of the work was in applying abstractions and hiding stuff that didn't have to be exposed as an API. Compare the original version

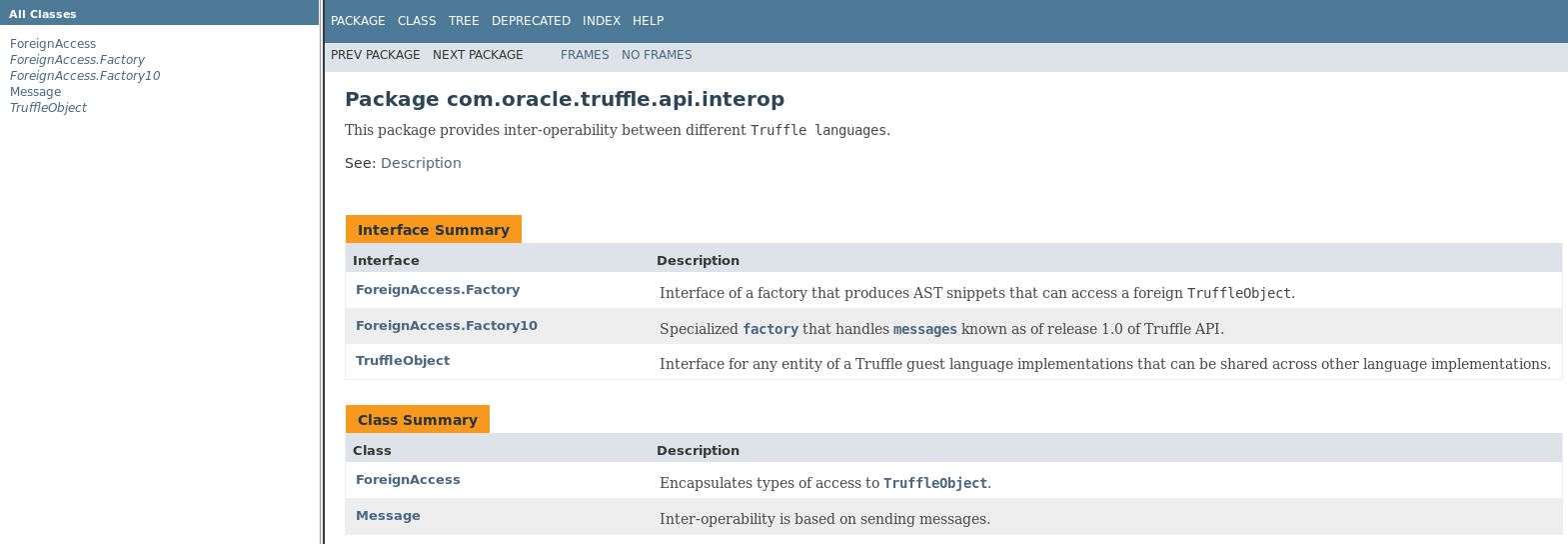

with the polished one. Instead of almost twenty classes split into five packages, there are just five classes in a single package. Just these five abstractions were enough to provide the same functionality - this is the kind of service you can expect when asking someone to design API as a service.

I was so proud that I even presented the result at a conference. Matthias was listening to my talk and felt ashamed. But there was no reason to be: I had kept 99% of Matthias's original code, I had just hidden it. APIs should act as a facade: they should hide the gory details of the implementation in order to help users stay clueless and still remain productive. There is no need to be ashamed; we all did a good job (implementation- and API-wise).
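The facade principle can be sketched in plain Java. The names below are hypothetical, and foreign objects are modeled simply as maps. `Interop` is the small surface users call, while `MessageDispatcher` holds the implementation. In a real library `Interop` would be the only public class and the dispatcher would live in a hidden package. In this single-file sketch package-private access stands in for that.

```java
import java.util.HashMap;
import java.util.Map;

// The API: in a real library this would be the only public class of the package.
final class Interop {
    private Interop() {
    }

    /** Reads a member of a foreign object, whichever language created it. */
    static Object read(Object receiver, String member) {
        return MessageDispatcher.dispatch(receiver, "READ", member, null);
    }

    /** Writes a member of a foreign object. */
    static void write(Object receiver, String member, Object value) {
        MessageDispatcher.dispatch(receiver, "WRITE", member, value);
    }
}

// Implementation detail: free to change, because API users never name it.
final class MessageDispatcher {
    private MessageDispatcher() {
    }

    @SuppressWarnings("unchecked")
    static Object dispatch(Object receiver, String message, String member, Object value) {
        if (!(receiver instanceof Map)) {
            throw new UnsupportedOperationException(message + " is not supported by " + receiver);
        }
        Map<String, Object> object = (Map<String, Object>) receiver;
        switch (message) {
            case "READ":
                return object.get(member);
            case "WRITE":
                object.put(member, value);
                return value;
            default:
                throw new IllegalArgumentException("Unknown message " + message);
        }
    }
}

final class InteropDemo {
    public static void main(String[] args) {
        Map<String, Object> guestObject = new HashMap<>(); // stands in for a foreign object
        Interop.write(guestObject, "answer", 42);
        System.out.println(Interop.read(guestObject, "answer"));
    }
}
```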

Over the next months we added more classes to the interop package to support exception handling, to simplify writing boilerplate code when resolving interop messages, etc. After all, it is always better to start with a smaller API and expand it later than to try it the other way around.

Debugger

The Truffle team's master of debugger-related tasks was Michael Van De Vanter, author of the revolutionary article Debugging at Full Speed and many others. Michael had a debugger running for the Truffle implementations of Ruby and JavaScript, and the one for R was sort of working as well. However, the situation was similar to Matthias's polyglot attempts: each language needed its own setup to turn the debugger on. In addition, the way to start debugging was quite different from the way to start regular execution (i.e. via PolyglotEngine). As a result, one needed to decide ahead of time whether debugging would be needed or whether plain execution was enough. Of course this had to be unified.

After a bit of struggling I managed to reverse the dependency: one could always start execution with PolyglotEngine and, if the debugger was initialized, debugging started automatically. Moreover, I added a few hooks that could be triggered via the JPDA debugger protocol. Based on them, Martin Entlicher (the NetBeans debugger guy) implemented TruffleNetBeansDebugger. Since then NetBeans has been able to debug any Truffle-based language, step through the sources (even without the IDE understanding them), inspect stack variables, etc.
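A hypothetical sketch of the reversed dependency (not the real `PolyglotEngine` or debugger API): execution always goes through `Engine.eval`, and a `Debugger` registers itself with an existing engine as an optional listener. Code that never debugs pays only for an empty loop, and nobody has to decide ahead of time whether debugging will be needed. The toy "language" just sums integer statements separated by `;`.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical engine: a single entry point, whether or not anybody debugs.
final class Engine {
    private final List<Consumer<String>> stepListeners = new ArrayList<>();

    // Called by the debugger: the dependency points from debugger to engine.
    void addStepListener(Consumer<String> listener) {
        stepListeners.add(listener);
    }

    // Toy "language": statements separated by ';', each an integer literal; returns their sum.
    int eval(String program) {
        int sum = 0;
        for (String statement : program.split(";")) {
            String trimmed = statement.trim();
            for (Consumer<String> listener : stepListeners) {
                listener.accept(trimmed); // an empty loop when no debugger is attached
            }
            sum += Integer.parseInt(trimmed);
        }
        return sum;
    }
}

// Hypothetical debugger: attaches to an engine that may already have executed code.
final class Debugger {
    final List<String> visitedStatements = new ArrayList<>();

    static Debugger attach(Engine engine) {
        Debugger debugger = new Debugger();
        engine.addStepListener(debugger.visitedStatements::add);
        return debugger;
    }

    public static void main(String[] args) {
        Engine engine = new Engine();
        System.out.println(engine.eval("1; 2; 3"));  // plain execution
        Debugger debugger = Debugger.attach(engine); // no restart or special mode needed
        System.out.println(engine.eval("4; 5"));
        System.out.println(debugger.visitedStatements);
    }
}
```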

Of course the behavior was buggy and had to be tested. I thought about putting the tests into TruffleTCK, but except for one trivial case (using the debugger to kill a long-running execution) the tests seemed too language-specific to be tested from the bottom. Thus I created SLDebugTest, which verified that stepping over a factorial computation works in our testing Simple Language, shows proper values for local variables, etc. Other teams then copied the test and adapted it to their languages. As far as I can tell, the consistency of the debugger implementations increased as a result.

All of this was achievable without Domain Expert knowledge of Truffle. However, there were a few problems that had to be addressed:

- The AST instrumentation API turned out to have too much memory overhead, and the real Truffle AST expert, Christian Humer, had to be called in to rewrite it

- Initial evaluation (that talked to a debugger) was slow; after weeks of struggling and help from Christian I finally learned enough about partial evaluation tricks (Assumption, CompilerDirectives.CompilationFinal) to speed it up

- The debugger could only be attached when a new PolyglotEngine evaluation was about to start; again, Christian's new instrumentation API was needed to fix that
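The idea behind the `Assumption` trick can be approximated in plain Java. The real speed-up only appears when Truffle's partial evaluator folds a valid assumption into a constant; plain HotSpot still performs the read. In this sketch `StableAssumption` is a made-up stand-in for `Assumption`. The hot path asks one cheap question ("is there still no debugger?") instead of consulting the debugger at every node, and attaching a debugger invalidates the assumption once. `CompilerDirectives.CompilationFinal` plays a related role for individual fields: it asks the compiler to treat their current value as constant.

```java
// Hypothetical stand-in for a Truffle Assumption: cheap to check, invalidated at most once.
final class StableAssumption {
    private volatile boolean valid = true;

    boolean isValid() {
        return valid;
    }

    void invalidate() {
        valid = false;
    }
}

// A node on the hot path of a toy interpreter.
final class StatementNode {
    private final StableAssumption noDebugger;
    private final int value;
    int slowPathCount; // how often the debugger-aware slow path ran

    StatementNode(StableAssumption noDebugger, int value) {
        this.noDebugger = noDebugger;
        this.value = value;
    }

    int execute() {
        // In Truffle a valid assumption can be folded away by the compiler;
        // plain Java still performs this volatile read on every call.
        if (!noDebugger.isValid()) {
            // Slow path: here a real node would ask the debugger whether to suspend.
            slowPathCount++;
        }
        return value;
    }

    public static void main(String[] args) {
        StableAssumption noDebugger = new StableAssumption();
        StatementNode node = new StatementNode(noDebugger, 7);
        for (int i = 0; i < 1_000; i++) {
            node.execute();
        }
        noDebugger.invalidate(); // e.g. a debugger was attached
        node.execute();
        System.out.println(node.slowPathCount); // 1
    }
}
```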

These problems show the limits of design API as a service: designing the API worked, but making it fast required a lot of understanding of what happens under the covers.

Source API

The Source class was the central point of the Truffle source API. As its name suggests, the API was created by Michael to encapsulate the sources. Usually I advocate keeping API and SPI separate (see APIvsSPI), but as the Source class was final and just held the code, I decided to keep it and use the same class for both the ClientAPI (e.g. PolyglotEngine) and the ProviderAPI (e.g. TruffleLanguage). As far as I can tell, this worked fine.

However, at some point we realized that the source object was mutable: if the file on disk changed, it could reload its content. That was wild: imagine a language parser having an AST built around the source buffer, and the buffer suddenly changes. All character references are suddenly wrong!

This had to stop, so I made everything immutable with the help of a few newly invented API design patterns: BuilderWithConditionalException, ChameleonBuilder, HiddenBuilder and WhiningBuilder. Innovation and design as a service at your service!
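A minimal sketch of both ideas, using a simplified hypothetical `Source` class rather than Truffle's real one. First, the characters are read exactly once, when the object is built, so a later change of the file cannot affect an existing instance. Second, the builder's type parameter decides which checked exception `build()` declares. Building from a string needs no `try`/`catch`, while building from a file forces the caller to deal with `IOException`, which is roughly the idea behind the BuilderWithConditionalException name. The real Truffle builder differs in details.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;

// Hypothetical immutable source: the characters are captured when the object is built.
final class Source {
    private final String name;
    private final String characters;

    private Source(String name, String characters) {
        this.name = name;
        this.characters = characters;
    }

    String getName() {
        return name;
    }

    String getCharacters() {
        return characters;
    }

    // Building from text can never fail with a checked exception...
    static Builder<RuntimeException> fromText(String name, String text) {
        return new Builder<RuntimeException>(name, () -> text);
    }

    // ...while building from a file has to declare IOException.
    static Builder<IOException> fromFile(Path file) {
        return new Builder<IOException>(file.getFileName().toString(),
                () -> new String(Files.readAllBytes(file), StandardCharsets.UTF_8));
    }

    interface Loader<E extends Exception> {
        String load() throws E;
    }

    static final class Builder<E extends Exception> {
        private final String name;
        private final Loader<E> loader;

        private Builder(String name, Loader<E> loader) {
            this.name = name;
            this.loader = loader;
        }

        // The checked exception (if any) depends on how the builder was created.
        Source build() throws E {
            return new Source(name, loader.load());
        }
    }

    public static void main(String[] args) {
        Source s = Source.fromText("hello.js", "print('Hello')").build(); // no try/catch needed
        System.out.println(s.getName() + ": " + s.getCharacters());
    }
}
```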

Sigtest

An essential part of developing an API in a modular way is keeping backward compatibility: only then can each downstream team update to a new version of your project on its own independent schedule, without the need to orchestrate adjustments to incompatible changes across all teams. However, it is hard to keep backward compatibility while relying only on one's cleverness. Just as people no longer test their programs only by proof-reading the source code, we should use tools to test API compatibility. NetBeans had been using Sigtest for a long time, so I decided to use it for the Truffle project as well.

Details are given on the TruffleSigtest page. I just want to stress that running Sigtest isn't just about starting some job as part of the build process. It requires an infrastructure to keep daily and release API snapshots and to continuously compare the current state against the previous snapshots. I integrated Sigtest into the Truffle build infrastructure (mx) and maintained the necessary servers to give us early warnings in case of backward compatibility violations.
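Sigtest has its own snapshot format and checks much more (fields, constructors, class hierarchy, generics). The following is only a toy illustration of the underlying idea, with hypothetical names. It records the public method signatures of an API class so they can be stored, and on every build compares the current set against the stored one. Signatures that disappeared are potential backward compatibility violations; additions are fine.

```java
import java.lang.reflect.Method;
import java.lang.reflect.Modifier;
import java.util.Set;
import java.util.TreeSet;

// Toy API class whose signatures we want to keep stable.
class SampleApi {
    public int size() {
        return 0;
    }

    public String name(int index) {
        return "item" + index;
    }
}

final class SignatureSnapshot {
    // Records "returnType name(paramTypes)" for every public, non-synthetic declared method.
    static Set<String> take(Class<?> api) {
        Set<String> signatures = new TreeSet<>();
        for (Method m : api.getDeclaredMethods()) {
            if (!Modifier.isPublic(m.getModifiers()) || m.isSynthetic()) {
                continue;
            }
            StringBuilder sb = new StringBuilder();
            sb.append(m.getReturnType().getName()).append(' ').append(m.getName()).append('(');
            Class<?>[] params = m.getParameterTypes();
            for (int i = 0; i < params.length; i++) {
                if (i > 0) {
                    sb.append(',');
                }
                sb.append(params[i].getName());
            }
            signatures.add(sb.append(')').toString());
        }
        return signatures;
    }

    // Signatures present in the previous snapshot but missing now would break existing clients.
    static Set<String> removed(Set<String> previous, Set<String> current) {
        Set<String> missing = new TreeSet<>(previous);
        missing.removeAll(current);
        return missing;
    }

    public static void main(String[] args) {
        System.out.println(take(SampleApi.class)); // [int size(), java.lang.String name(int)]
    }
}
```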

Jackpot

While backward compatibility is important, Truffle is also a young project that still needed to make some API fixes. To balance that, I defined the following compatibility policy: an API element has to be deprecated first and only then removed in a subsequent release. With this policy we could give our downstream teams a strong compatibility guarantee: warn them via deprecations and still offer a relatively stable development environment. To simplify switching away from deprecated APIs, I convinced Jan Lahoda (master of anything AST-related in the Javac and NetBeans teams) to integrate his Jackpot project with the mx build tool. Ideally, it was just a matter of typing mx jackpot --apply, and the changes necessary to migrate from a deprecated API to its replacement were made automatically in any code base the downstream teams had.
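In code, the "deprecate first, remove later" policy typically looks like the hypothetical `Greeter` below. The old method stays for at least one release, is marked `@Deprecated`, points to its replacement in Javadoc and simply delegates to it. Because the old call and the new call are equivalent, migrating callers is a mechanical rewrite, which is what a tool like Jackpot can automate (its rule syntax is not shown here).

```java
// Hypothetical API class evolving under a "deprecate first, remove later" policy.
final class Greeter {
    private final String greeting;

    Greeter(String greeting) {
        this.greeting = greeting;
    }

    /**
     * Old entry point, kept for at least one more release.
     *
     * @deprecated use {@link #greet(String)} instead
     */
    @Deprecated
    String sayHello(String name) {
        return greet(name); // delegate, so old and new callers behave identically
    }

    /** Replacement for {@link #sayHello(String)}. */
    String greet(String name) {
        return greeting + ", " + name + "!";
    }

    public static void main(String[] args) {
        System.out.println(new Greeter("Hello").greet("Truffle")); // Hello, Truffle!
    }
}
```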

Jackpot was also useful when we decided to switch to JDK 8 but wanted to avoid the startup overhead associated with lambdas. It was possible to create a Jackpot rule that detects lambdas and fails the compilation. Knowing these tools can significantly improve the evolution of your API and give users of the API a more pleasant experience when consuming it.

Javadoc

The original mx javadoc support wasn't suitable for API development. It generated Javadoc for all project classes, but that isn't what one wants for APIs. In an API some packages are public and some are an implementation detail, and the Javadoc should only be generated for the public ones. I addressed that in the Truffle project by modifying mx to pay attention to the `package-info.java` file: if it was present, the package was considered public (and included in the Truffle Javadoc), and all other packages were kept out of sight. That contributed a lot to the quality of the documentation.
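The rule is simple enough to sketch. The actual change lived in mx, which is written in Python. `PublicPackages` is a hypothetical Java rendition of the same logic: walk a source root and report a directory as a public API package only if it contains `package-info.java`.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

final class PublicPackages {
    // Returns dotted package names whose directory contains package-info.java.
    static List<String> find(Path sourceRoot) throws IOException {
        try (Stream<Path> files = Files.walk(sourceRoot)) {
            return files
                    .filter(p -> p.getFileName().toString().equals("package-info.java"))
                    // directory relative to the root, e.g. com/example/api -> com.example.api
                    .map(p -> sourceRoot.relativize(p.getParent()).toString()
                            .replace(p.getFileSystem().getSeparator(), "."))
                    .sorted()
                    .collect(Collectors.toList());
        }
    }

    public static void main(String[] args) throws IOException {
        Path root = Paths.get(args.length > 0 ? args[0] : ".");
        find(root).forEach(System.out::println); // only these packages would get Javadoc
    }
}
```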

Yet there were still problems. Each API contains sample snippets of code, and soon I realized that most of the snippets in the documentation were wrong, uncompilable or at least outdated. The solution is easy: make sure these snippets are compiled together with the rest of your code, and they will always be up to date. It is then only necessary to extract them from the source code and insert them into the documentation. That is why I created the Codesnippet4Javadoc doclet and integrated it with mx javadoc. By the way, a similar technology was used when I was writing TheAPIBook. As a result, the Truffle samples in the Javadoc documentation can easily be kept correct and up to date.
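A hypothetical sketch of the snippet mechanism, not the Codesnippet4Javadoc implementation itself. It assumes that a snippet is delimited in a compiled source file by `// BEGIN: id` and `// END: id` comments and referenced from documentation as `{@codesnippet id}`. `SnippetInliner` copies the code between the markers into the generated HTML. Since the snippet lives in code the build compiles, it cannot silently rot.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class SnippetInliner {
    private static final Pattern REFERENCE = Pattern.compile("\\{@codesnippet ([\\w.]+)\\}");

    // Returns the lines between "// BEGIN: id" and "// END: id", markers excluded.
    static String extract(String source, String id) {
        int begin = source.indexOf("// BEGIN: " + id);
        int end = begin < 0 ? -1 : source.indexOf("// END: " + id, begin);
        int bodyStart = begin < 0 ? -1 : source.indexOf('\n', begin) + 1;
        if (begin < 0 || end < 0 || bodyStart <= 0 || bodyStart > end) {
            throw new IllegalArgumentException("Snippet not found: " + id);
        }
        // Strip the trailing newline and the indentation in front of the END marker.
        return source.substring(bodyStart, end).replaceAll("\\s+$", "");
    }

    // Replaces each {@codesnippet id} in the documentation with the compiled code it names.
    static String inline(String doc, String source) {
        Matcher m = REFERENCE.matcher(doc);
        StringBuffer out = new StringBuffer();
        while (m.find()) {
            String code = extract(source, m.group(1));
            m.appendReplacement(out, Matcher.quoteReplacement("<pre>\n" + code + "\n</pre>"));
        }
        m.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        String source = "class Demo {\n"
                + "  void run() {\n"
                + "    // BEGIN: demo.hello\n"
                + "    System.out.println(\"Hello\");\n"
                + "    // END: demo.hello\n"
                + "  }\n"
                + "}\n";
        System.out.println(inline("Usage: {@codesnippet demo.hello}", source));
    }
}
```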

Still, the Truffle API is huge (a common feature of almost all APIs) and it is not easy to know where to start. To address this, the Truffle Javadoc now opens with a page containing a prose tutorial. The text guides API users and introduces them to various Truffle concepts. Only from there (and from the code samples embedded in the text) does one navigate to the actual classes and their methods to find details about calling conventions and other low-level matters. Such a setup keeps the tutorial text up to date with its code samples and cross-referenced with the classical part of the Javadoc documentation.

Setting this up was of course easy to do without any Domain Expert knowledge (except the need to read and change the enormous mx.py Python file).

Node.js & Java Interop

NodeJS and Java interaction has interested me since the days of avatar.js, and I was thrilled to contribute to it when working on Truffle. The essential part is of course Java interop: by building it around a typesafe view of any dynamic object from Java (see the example at https://graalvm.github.io/graal/truffle/javadoc/com/oracle/truffle/tutorial/embedding/package-summary.html#Access_guest_language_classes_from_Java) I tried to make the co-operation between Java and JavaScript as smooth and natural as possible for both sides. An internal APIUsabilityStudy revealed certain implementation glitches (especially with respect to higher-order collections like List<Map<String,List<Integer>>>, which required a few fixes), but otherwise the API approach turned out to be quite usable for the early Truffle adopters.
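The typesafe view can be illustrated with the JDK's `java.lang.reflect.Proxy`. In this hypothetical sketch, which is not Truffle's implementation, a dynamic object is modeled as a `Map<String, Object>` (roughly how a JavaScript object looks from Java), and the Java side declares the interface it expects. Each interface call becomes a lookup of the member with the same name. Numbers are converted to the declared return type, since dynamic languages often hand out doubles.

```java
import java.lang.reflect.Proxy;
import java.util.HashMap;
import java.util.Map;

// The shape the Java side expects; the guest object never has to implement it.
interface Point {
    int x();

    int y();
}

final class TypedView {
    // Wraps a dynamic object in an interface; each call reads the member with the method's name.
    static <T> T as(Class<T> type, Map<String, Object> dynamicObject) {
        Object proxy = Proxy.newProxyInstance(
                type.getClassLoader(),
                new Class<?>[] {type},
                (self, method, args) -> {
                    if (method.getDeclaringClass() == Object.class) {
                        // toString, hashCode, equals: delegate to the underlying object
                        return method.invoke(dynamicObject, args);
                    }
                    Object value = dynamicObject.get(method.getName());
                    if (value instanceof Number && method.getReturnType() == int.class) {
                        return ((Number) value).intValue(); // e.g. 3.0 from JavaScript becomes 3
                    }
                    // A missing member yields null, which fails for primitive return types.
                    return value;
                });
        return type.cast(proxy);
    }

    public static void main(String[] args) {
        Map<String, Object> jsPoint = new HashMap<>(); // like {x: 3.0, y: 4} coming from JavaScript
        jsPoint.put("x", 3.0);
        jsPoint.put("y", 4);
        Point p = as(Point.class, jsPoint);
        System.out.println(p.x() + p.y()); // 7
    }
}
```

The sketch does not handle nested collections such as `List<Map<String,List<Integer>>>`; those require applying the conversion recursively, which is the kind of case where the usability study found glitches.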

Integrating NodeJS and Java isn't only about the calls, but also about tooling. How do you build your Java code? How do you then launch it in the context of NodeJS? How do you isolate your code from the Truffle language implementations? As part of this project I found a way to use the industry-standard Maven build tool to build the Java sources and launch the Java+NodeJS application, and also to hide all the language classes from the end-user application classpath by means of the TruffleLocator class.

This required a few ClassLoader tricks, but that is something a former NetBeans architect should know. Not much Truffle Domain Expertise was needed otherwise.

Runtime Sharing of ASTs

Designing an API for sharing ASTs between different contexts/threads turned out to be a huge task. Without the help of Christian, the Domain Expert, I couldn't even have gotten started. But once we overcame the first obstacle, i.e. found out how to keep a reference to the context in the most efficient way, the rest started to materialize. The trick was to create a group of PolyglotEngine instances that would share their settings.

However, technical issues (especially with interop) held me back. In the end Christian tried it himself and got it to a working state (while some of the issues persisted). Then I massaged the APIs, trying to remove everything that seemed superfluous, and in the end we got to the state I had envisioned: I just had to expose the interface representing a group of engines in the API as PolyglotRuntime, and the rest stayed as planned. We integrated the change in spring 2017.
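A hypothetical sketch of the "group of engines" idea (made-up names, not the real `PolyglotRuntime` API). Engines created from the same `SharedRuntime` share one cache of parsed programs, so code parsed by one engine is reused by the others, while each engine keeps its own context, here just a map of globals. The key constraint is that the shared AST must not hold context-specific state; that state is passed in during execution instead.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;

// Immutable result of parsing: holds no context state, so engines can share it.
final class ParsedProgram {
    private final int[] operands;

    ParsedProgram(String code) {
        String[] parts = code.split("\\+");
        operands = new int[parts.length];
        for (int i = 0; i < parts.length; i++) {
            operands[i] = Integer.parseInt(parts[i].trim());
        }
    }

    // Per-engine state is passed in as a context instead of being stored in the AST.
    int execute(Map<String, Integer> context) {
        context.merge("runs", 1, Integer::sum);
        int sum = 0;
        for (int operand : operands) {
            sum += operand;
        }
        return sum;
    }
}

// Hypothetical group of engines sharing one AST cache.
final class SharedRuntime {
    private final Map<String, ParsedProgram> astCache = new ConcurrentHashMap<>();
    private final AtomicInteger parses = new AtomicInteger();

    ContextEngine newEngine() {
        return new ContextEngine(this);
    }

    ParsedProgram parse(String code) {
        return astCache.computeIfAbsent(code, c -> {
            parses.incrementAndGet();
            return new ParsedProgram(c);
        });
    }

    int parseCount() {
        return parses.get();
    }
}

// Each engine has its own context (here a map of globals) but shares parsed code.
final class ContextEngine {
    private final SharedRuntime runtime;
    final Map<String, Integer> globals = new HashMap<>();

    ContextEngine(SharedRuntime runtime) {
        this.runtime = runtime;
    }

    int eval(String code) {
        return runtime.parse(code).execute(globals);
    }

    public static void main(String[] args) {
        SharedRuntime runtime = new SharedRuntime();
        ContextEngine first = runtime.newEngine();
        ContextEngine second = runtime.newEngine();
        System.out.println(first.eval("1 + 2") + second.eval("1 + 2")); // 6
        System.out.println("parsed " + runtime.parseCount() + " time(s)"); // parsed 1 time(s)
    }
}
```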

Clearly, without Domain Expert knowledge this task would not be finished yet, but that was more of an implementation detail: the APIs ended up exactly as designed, even without proper domain knowledge.

Can One Design API as a service?

If we look back at the list of topics covered during my TwoYearsWithTruffle, we can see that many of them could be achieved without explicit Domain Expert knowledge. Things like setting up the infrastructure, polishing and publishing documentation, and simplifying existing APIs can all be delivered as a service. Complex tasks, however, require some Domain Expert knowledge, and that is what I am going to acquire now: I am going to learn how to write a compiler!